vmware

Connecting a VMware ESXi server to free iSCSI storage on FreeNAS

First, some iSCSI basics, from Wikipedia: http://en.wikipedia.org/wiki/ISCSI

iSCSI uses TCP/IP (typically TCP ports 860 and 3260). In essence, iSCSI simply allows two hosts to negotiate and then exchange SCSI commands using IP networks. By doing this, iSCSI takes a popular high-performance local storage bus and emulates it over wide-area networks, creating a storage area network (SAN). Unlike some SAN protocols, iSCSI requires no dedicated cabling; it can be run over existing switching and IP infrastructure. As a result, iSCSI is often seen as a low-cost alternative to Fibre Channel, which requires dedicated infrastructure.

Although iSCSI can communicate with arbitrary types of SCSI devices, system administrators almost always use it to allow server computers (such as database servers) to access disk volumes on storage arrays. iSCSI SANs often have one of two objectives:

Storage consolidation

Organizations move disparate storage resources from servers around their network to central locations, often in data centers; this allows for more efficiency in the allocation of storage. In a SAN environment, a server can be allocated a new disk volume without any change to hardware or cabling.

Disaster recovery

Organizations mirror storage resources from one data center to a remote data center, which can serve as a hot standby in the event of a prolonged outage. In particular, iSCSI SANs allow entire disk arrays to be migrated across a WAN with minimal configuration changes, in effect making storage “routable” in the same manner as network traffic.

Here are the basic terms:

Initiator


An initiator functions as an iSCSI client. An initiator typically serves the same purpose to a computer as a SCSI bus adapter would, except that instead of physically cabling SCSI devices (like hard drives and tape changers), an iSCSI initiator sends SCSI commands over an IP network. An initiator falls into two broad types:

- Software initiator

- A software initiator uses code to implement iSCSI. Typically, this happens in a kernel-resident device driver that uses the existing network card (NIC) and network stack to emulate SCSI devices for a computer by speaking the iSCSI protocol. Software initiators are available for most mainstream operating systems, and this type is the most common mode of deploying iSCSI on computers.

- Hardware initiator

- A hardware initiator uses dedicated hardware, typically in combination with software (firmware) running on that hardware, to implement iSCSI. A hardware initiator mitigates the overhead of iSCSI and TCP processing and Ethernet interrupts, and therefore may improve the performance of servers that use iSCSI.

Host Bus Adapter

An iSCSI host bus adapter (more commonly, HBA) implements a hardware initiator. A typical HBA is packaged as a combination of a Gigabit (or 10 Gigabit) Ethernet NIC, some kind of TCP/IP offload technology (TOE) and a SCSI bus adapter, which is how it appears to the operating system.

An iSCSI HBA can include PCI option ROM to allow booting from an iSCSI target.

Target

iSCSI refers to a storage resource located on an iSCSI server (more generally, one of potentially many instances of iSCSI running on that server) as a “target”. An iSCSI target usually represents hard disk storage. As with initiators, software to provide an iSCSI target is available for most mainstream operating systems.

- Storage array

- In a data center or enterprise environment, an iSCSI target often resides in a large storage array, such as a NetApp filer or an EMC Corporation NS-series computer appliance. A storage array usually provides distinct iSCSI targets for numerous clients.[1]

- Software target

- In a smaller or more specialized setting, mainstream server operating systems (like Linux, Solaris or Windows Server 2008) and some specific-purpose operating systems (like NexentaStor, StarWind iSCSI SAN, FreeNAS, iStorage Server, OpenFiler or FreeSiOS) can provide iSCSI target functionality.

Addressing

Special names refer to both iSCSI initiators and targets. iSCSI provides three name-formats:

- iSCSI Qualified Name (IQN)

- Format: iqn.yyyy-mm.{reversed domain name} (e.g. iqn.2001-04.com.acme:storage.tape.sys1.xyz) (Note: there is an optional colon with arbitrary text afterwards. This text is there to help better organize or label resources.)
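To make the IQN layout above concrete, here is a minimal Python sketch that splits a name into its parts. It only covers the simplified `iqn.yyyy-mm.{reversed domain name}[:label]` shape quoted above; the regular expression and the `parse_iqn` helper are my own illustration, not part of any iSCSI tool, and real initiators may accept more than this.

```python
import re

# Simplified shape: iqn.yyyy-mm.{reversed domain name}[:optional label]
IQN_PATTERN = re.compile(
    r"^iqn\.(?P<year>\d{4})-(?P<month>0[1-9]|1[0-2])"
    r"\.(?P<authority>[a-z0-9](?:[a-z0-9.\-]*[a-z0-9])?)"
    r"(?::(?P<label>.+))?$"
)


def parse_iqn(name):
    """Return the parts of an IQN as a dict, or None if it does not match."""
    match = IQN_PATTERN.match(name)
    if match is None:
        return None
    return match.groupdict()
```

For the example name quoted above, `parse_iqn("iqn.2001-04.com.acme:storage.tape.sys1.xyz")` gives the year, month, reversed domain and the free-form label after the colon as separate fields.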

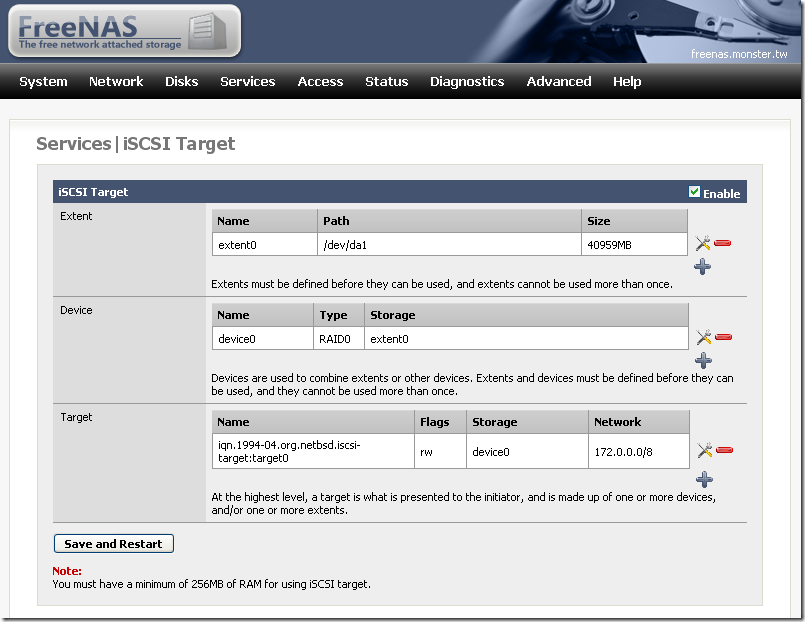

Here is the step-by-step setup on the FreeNAS side:

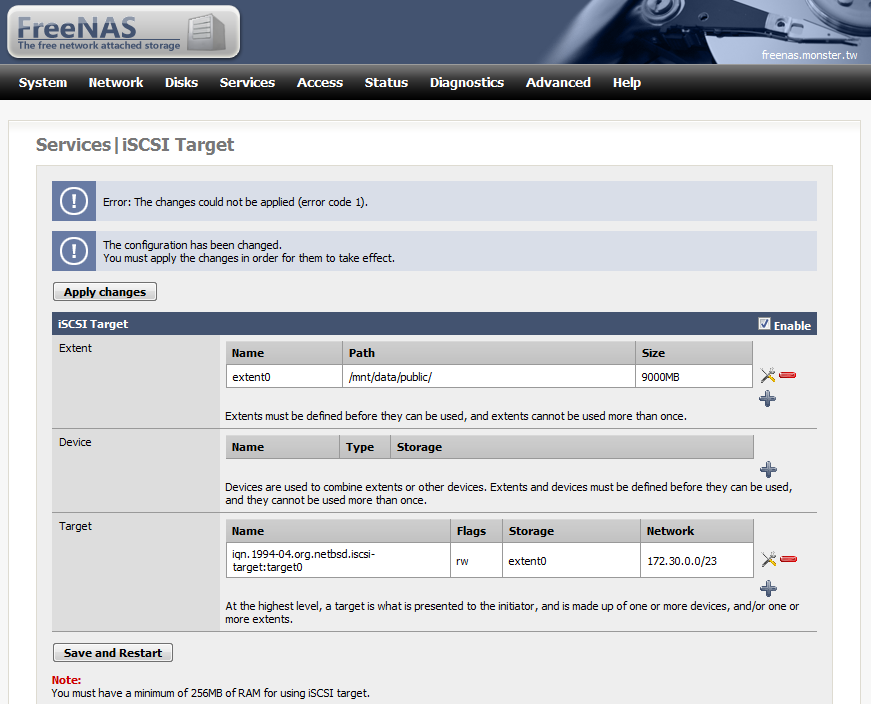

I used one entire hard disk as a single iSCSI target. (FreeNAS can back a target with either a file or a device. Each has its pros and cons, though a device naturally performs better.)

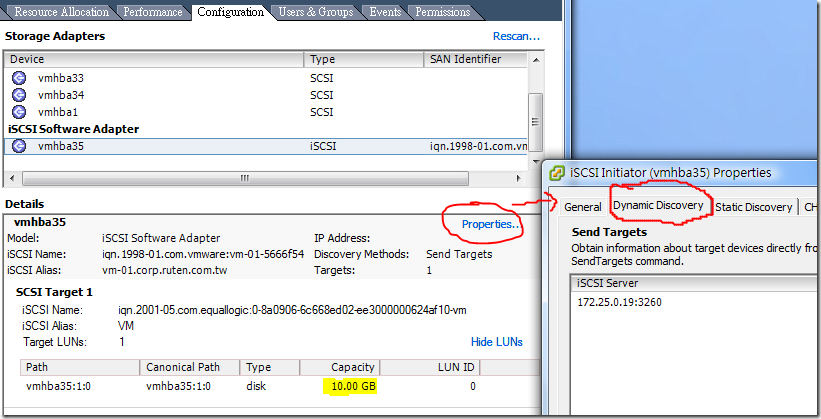

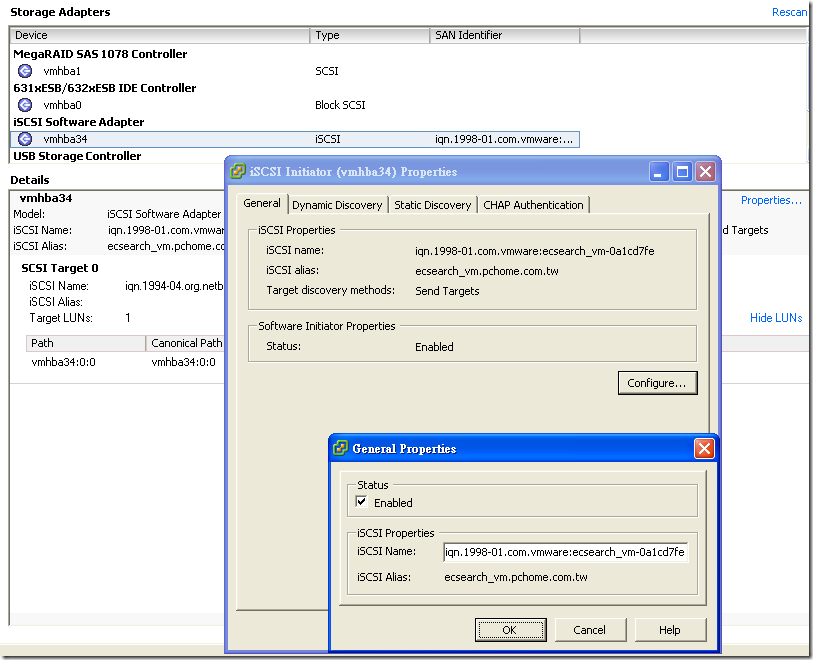

On the VMware ESXi server, enable the iSCSI software adapter (the initiator):

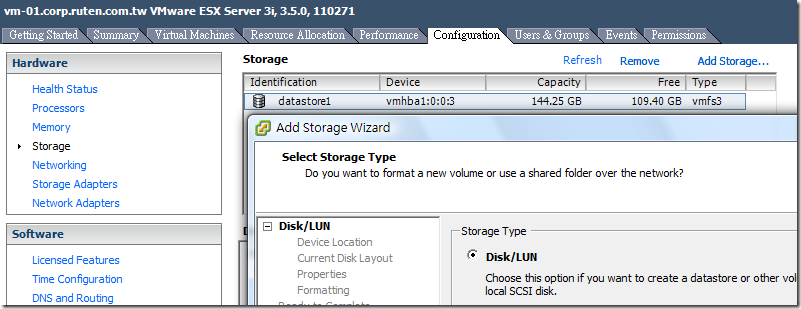

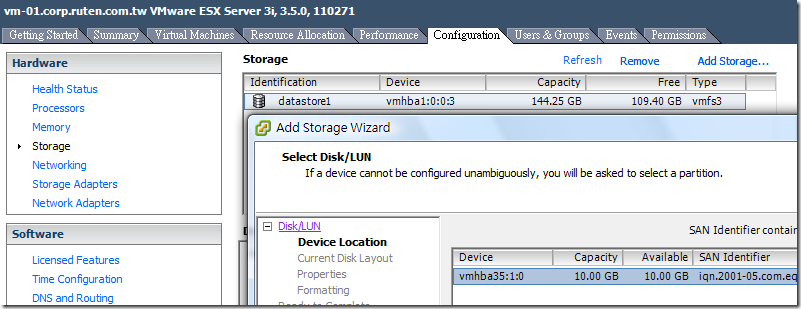

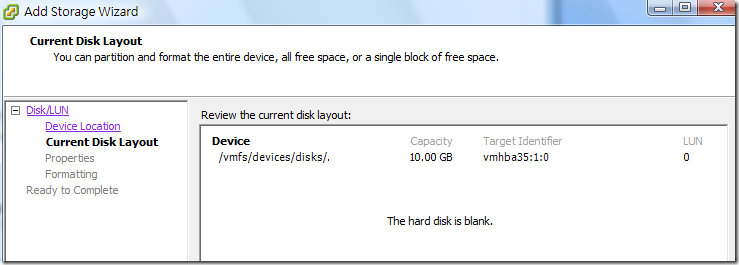

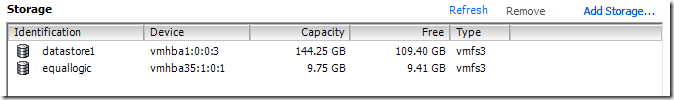

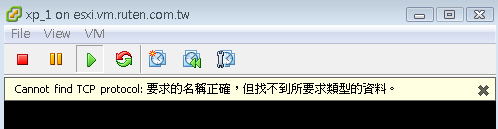

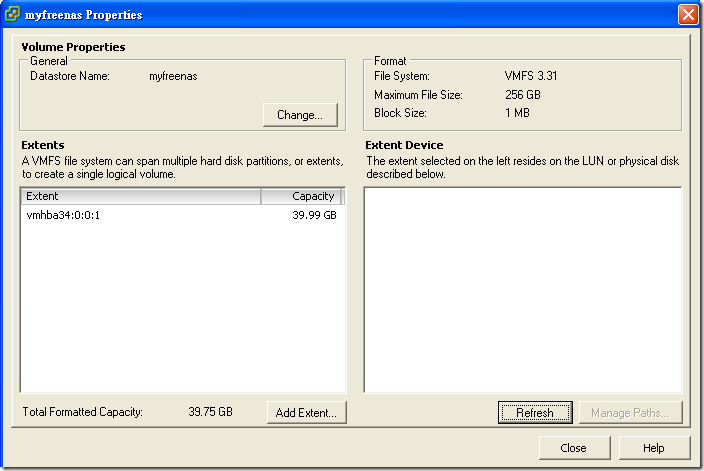

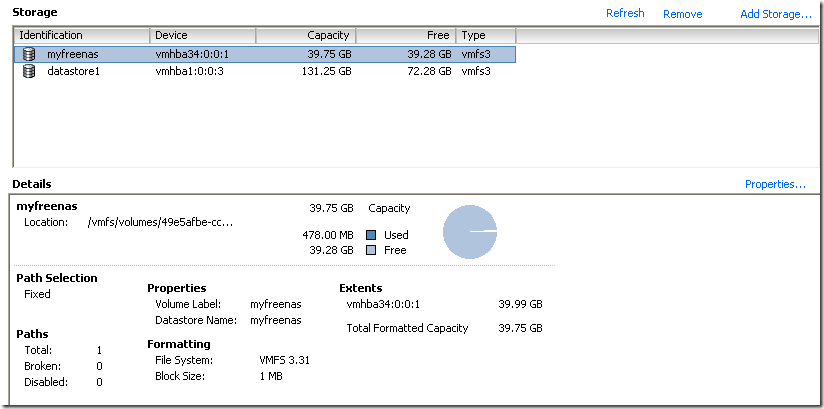

Under Storage, add the iSCSI target provided by FreeNAS. Windows Vista can add the target directly; XP and other Windows Server versions need the iSCSI software provided by Microsoft installed first.
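If the target does not show up, a quick first check is whether the FreeNAS box is reachable on the iSCSI port at all. The sketch below is only a plain TCP connect test in Python, assuming the default port 3260 mentioned in the Wikipedia excerpt; the `tcp_port_open` helper and the address in the comment are made up for illustration, and a successful connect does not prove that iSCSI login will work.

```python
import socket


def tcp_port_open(host, port=3260, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, timed out, or name resolution failed.
        return False


# Example (hypothetical address of the FreeNAS box):
# print(tcp_port_open("192.0.2.10"))
```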

However, I found that an iSCSI extent backed by a file produces an error: Error: The changes could not be applied (error code1).

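VMware is rather fond of renaming things: VMware VirtualCenter is now called vCenter

The new VMware Converter is called vCenter Converter Standalone. It looks like upgrades for the current programs should all be searched for under the vCenter name from now on.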


Monster LAMP Pack Lite – ver.317

Basic install emerge

lilo dhcpcd openssh syslog-ng vixie-cron screen ntp cronolog net-mail/mpack app-arch/sharutils unzip bind-tools trafshow traceroute

Linux : 2.6.24-gentoo-r7

Apache : 2.2.10

Mysql : 5.0.70-r1

PHP : 5.2.8-pl2

PHP was built like this:

USE="apache2 berkdb bzip2 calendar cjk cli crypt curl gd gdbm hash iconv json mysql mysqli ncurses nls oci8-instant-client pcre readline reflection session simplexml spell spl ssl truetype unicode xml zlib" emerge -av php

Packages included:

samba :

postfix :

oracle instant client :

open-vm-tools : VMware's guest tools

emerge these packages:

Essential software:

mysql php apache postfix

Moderately important:

screen ntp samba

Utilities:

subversion vim open-vm-tools cronolog net-mail/mpack app-arch/sharutils unzip

Remember to:

- Delete /etc/udev/rules.d/70-persistent-net.rules

- Change net_DHCP

- Clear out /tmp/*

- check /etc/conf.d/clock , /etc/hosts , /etc/resolv.conf

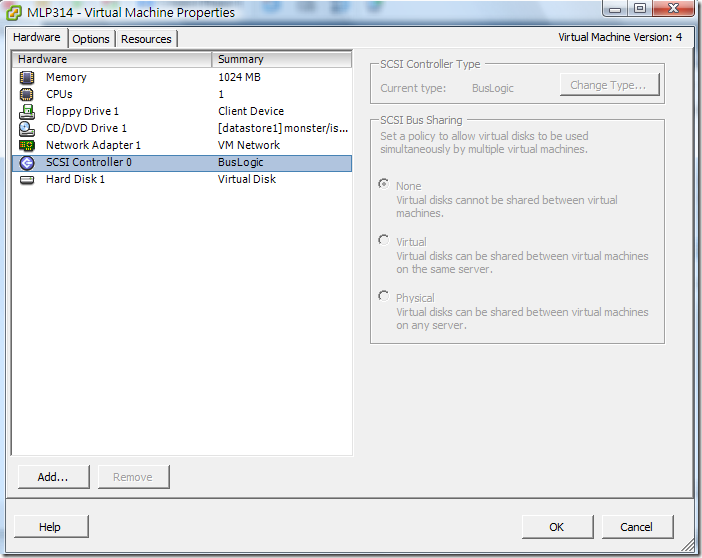

vmware esx server – compile linux kernel / scsi controller/driver problem

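Steps for reinstalling a Gentoo Linux guest directly on a VMware ESX server

Here you need to tick BusLogic, and in make menuconfig you need to select BusLogic as well (stating the obvious…)

After booting, the output of dmesg | grep scsi is: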

scsi: ***** BusLogic SCSI Driver Version 2.1.16 of 18 July 2002 *****

scsi: Copyright 1995-1998 by Leonard N. Zubkoff <[email protected]>

scsi0: Configuring BusLogic Model BT-958 PCI Wide Ultra SCSI Host Adapter

scsi0: Firmware Version: 5.07B, I/O Address: 0x1060, IRQ Channel: 17/Level

scsi0: PCI Bus: 0, Device: 16, Address: 0xF4800000, Host Adapter SCSI ID: 7

scsi0: Parity Checking: Enabled, Extended Translation: Enabled

scsi0: Synchronous Negotiation: Ultra, Wide Negotiation: Enabled

scsi0: Disconnect/Reconnect: Enabled, Tagged Queuing: Enabled

scsi0: Scatter/Gather Limit: 128 of 128 segments, Mailboxes: 211

scsi0: Driver Queue Depth: 211, Host Adapter Queue Depth: 192

scsi0: Tagged Queue Depth: Automatic, Untagged Queue Depth: 3

scsi0: *** BusLogic BT-958 Initialized Successfully ***

scsi0 : BusLogic BT-958

scsi 0:0:0:0: Direct-Access VMware Virtual disk 1.0 PQ: 0 ANSI: 2

sd 0:0:0:0: Attached scsi generic sg0 type 0

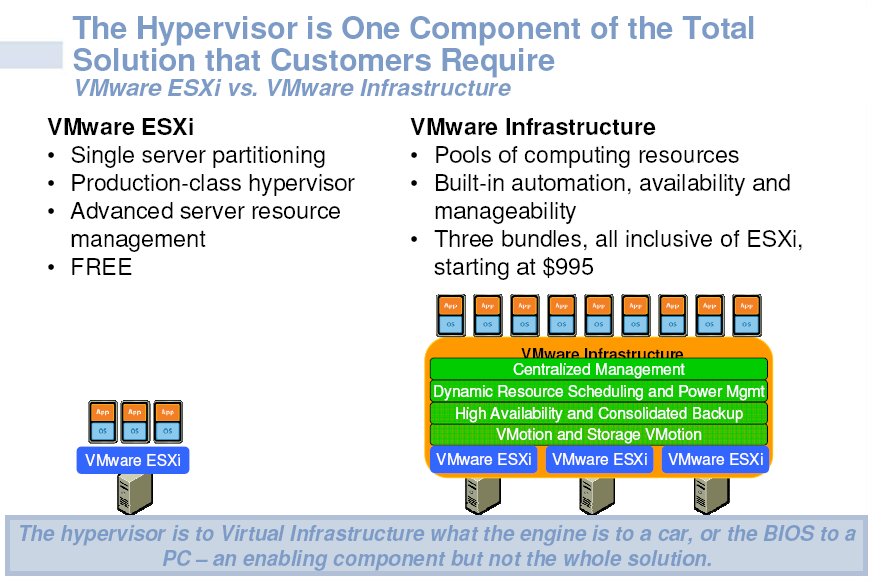

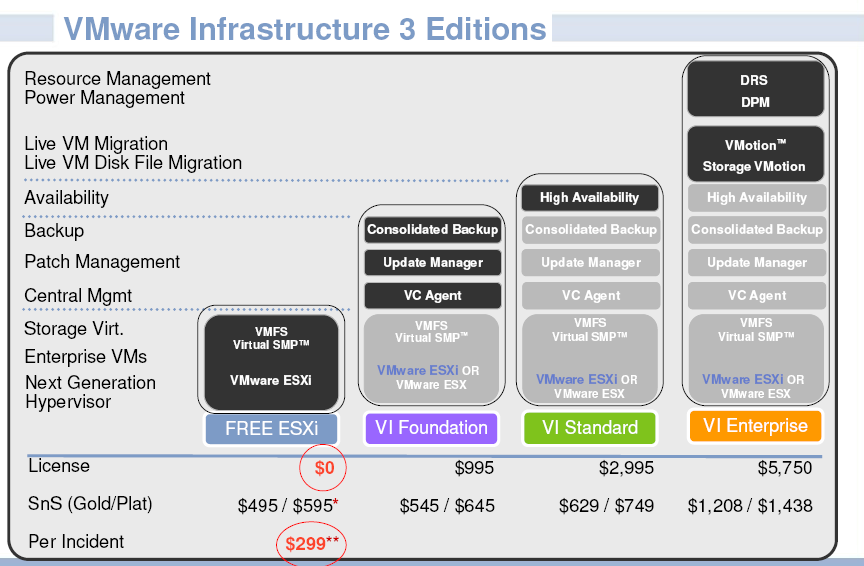

VMware datacenter / ESXi

This page describes VMware's virtualization offerings, and they look remarkably complete. A truly dream-level IT datacenter solution is all there … I hope to get a chance to try it out.

http://www.vmware.com/technology/virtualization-resources.html

ESXi

Using HAProxy as a load balancer – the poor man's SLB (server load balancing)

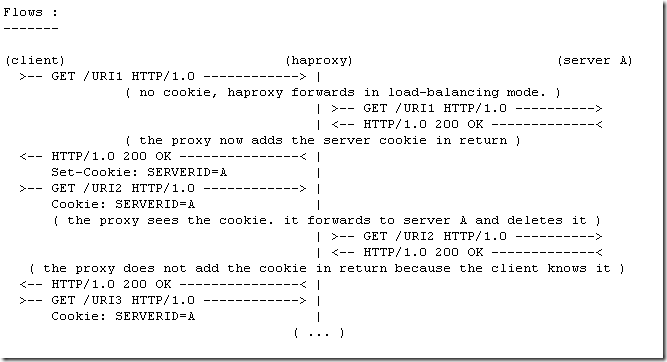

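This is the simplified architecture, showing the HTTP flow:

Actually, setting it up was not as complicated as I had imagined. Preparing a test environment was the only tedious part, and VMware helped me out a lot there once again 😛

See its online documentation: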

http://haproxy.1wt.eu/download/1.3/doc/haproxy-en.txt

and the architecture guide:

http://haproxy.1wt.eu/download/1.3/doc/architecture.txt

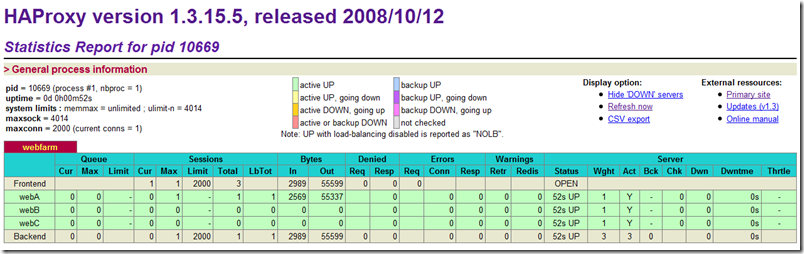

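After skimming those two documents you can start testing. First prepare three web servers, webA through webC, plus one more server to act as the HAProxy server (this one does not need to run Apache). Installing HAProxy is easy: on Gentoo it is just emerge -av haproxy. You have to create the config file yourself (put it in /etc/).

My /etc/haproxy.cfg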

    listen webfarm 172.30.0.235:80
        monitor-uri /haproxy_status
        stats uri /stats
        stats auth admin:admin
        mode http
        balance roundrobin
        cookie SERVERID insert indirect
        option httpchk HEAD /index.php HTTP/1.0
        server webA 172.30.0.206:80 cookie A check
        server webB 172.30.0.227:80 cookie B check
        server webC 172.30.0.228:80 cookie C check

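After the first connection, HAProxy, as its manual says, sends a cookie to the client, and that cookie decides which real server the client is sent to next time. When I stopped that Apache instance, the client was indeed sent to a different server. A few things in phpinfo are worth noting:

SERVER_NAME is the listen IP written in haproxy.cfg, while SERVER_ADDR is the IP of the Apache server that was actually reached. HAProxy keeps sending HTTP/1.0 HEAD requests to check whether each Apache is still alive.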
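The behaviour I saw (round-robin for new clients, the cookie pinning a client to one server, and failover once the health check marks that server down) can be modelled in a few lines of Python. This is only a toy sketch of the idea, not how HAProxy is implemented; the `CookieBalancer` class and its method names are invented for illustration, and the backend addresses are the ones from my config.

```python
from itertools import cycle


class CookieBalancer:
    """Toy model of 'balance roundrobin' combined with a server cookie."""

    def __init__(self, servers):
        # servers maps cookie value -> backend address, e.g. {"A": "172.30.0.206:80"}
        self.servers = dict(servers)
        self.alive = {key: True for key in self.servers}
        self._ring = cycle(self.servers)

    def mark(self, key, up):
        """Record the result of a health check for one backend."""
        self.alive[key] = up

    def route(self, cookie=None):
        """Return (cookie_value, backend_address) for one request."""
        # Sticky path: honour the cookie while that backend is healthy.
        if cookie in self.servers and self.alive[cookie]:
            return cookie, self.servers[cookie]
        # Otherwise take the next healthy backend in round-robin order.
        for _ in range(len(self.servers)):
            key = next(self._ring)
            if self.alive[key]:
                return key, self.servers[key]
        raise RuntimeError("no healthy backend")
```

A client holding cookie A keeps landing on webA until `mark("A", False)` is called, after which the same request is handed to the next healthy server, which matches what happened when I stopped Apache.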

Unless you specifically change it, the Apache access logs on webA through webC simply record the HAProxy server's IP address.
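One common way to change that, which I did not test here, is to have the proxy pass the client address along in an X-Forwarded-For header (HAProxy has `option forwardfor` for this) and let the backend use it. The Python sketch below shows the backend-side decision under that assumption; the `client_ip` helper and the 10.0.0.7 client address are hypothetical, and the header is only trusted when the request really came from the proxy.

```python
def client_ip(remote_addr, headers, trusted_proxies):
    """Pick the address to log for a request.

    remote_addr is the peer address the web server sees, headers is a dict of
    request headers, trusted_proxies is a set of proxy addresses we trust.
    """
    forwarded = headers.get("X-Forwarded-For", "")
    if remote_addr in trusted_proxies and forwarded:
        # Use the last entry, i.e. the one appended by the trusted proxy.
        return forwarded.split(",")[-1].strip()
    # Direct connection, or a header we have no reason to believe.
    return remote_addr
```

With the HAProxy address from my config as the only trusted proxy, `client_ip("172.30.0.235", {"X-Forwarded-For": "10.0.0.7"}, {"172.30.0.235"})` returns the client's address instead of the proxy's.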

….

Done! The experiment is complete!

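Conclusion: HAProxy's official site says it

"provides a free, fast HA / load balancing solution", but I think it can only really claim HA / balancing (it does not thoroughly detect server load), and it has no good answer for the SPF (single point of failure) problem either.

Still, HAProxy is at least better than DNS round-robin.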

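Comparison of load-balancing options

| SLB | CPU usage | Forwarding efficiency | Connection detection |
| --- | --- | --- | --- |
| roundrobin DNS | Low | Best (sent directly to the client) | NO |
| ipvsadm | Lowest | High | YES |
| mod_proxy | High | Low | YES |
| haproxy | Medium | Medium | YES |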

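After sleeping on it: even SLB hardware devices such as Citrix, Alteon and Foundry do not do true load detection either, so for something free and high-capacity, HAProxy counts as a very good SLB solution.

Heh, everyone who has experimented with HAProxy posts this one: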